1. What is the necessity of testing?

2. What is Software Testing?

Testing & Debugging:

Testing and debugging are different. Executing tests can show failures that are caused by defects in the software.

Debugging is the development activity that finds, analyzes, and fixes such defects. Subsequent confirmation testing checks whether the fixes resolved the defects. In some cases, testers are responsible for the initial test and the final confirmation test, while developers do the debugging and associated component testing. However, in Agile development and in some other lifecycles, testers may be involved in debugging and component testing.

Debugging: The process of finding, analyzing and removing the causes of failures in software.

Software testing is an organizational process within software development in which business-critical software is verified for correctness, quality, and performance. It is used to ensure that business systems and product features behave as expected.

Software testing may either be a manual or an automated process.

- Manual software testing: tests are executed manually by a QA analyst. It is performed to discover bugs in software under development.

In Manual testing, the tester checks all the essential features of the given application or software. In this process, the software testers execute the test cases and generate the test reports without the help of any automation software testing tools.

- Automated software testing: testers write code or test scripts to automate test execution, using appropriate automation tools to develop the scripts and validate the software. The goal is to complete test execution in less time.

- Automated testing lets you run repetitive tasks and regression tests without the intervention of a manual tester. Even though the tests themselves run automatically, automation still requires some manual effort to create the initial test scripts.

KEY DIFFERENCE

- Manual testing is done by a QA analyst (human), whereas automation testing is done by a tester using scripts, code and automation tools (computer).

- Manual testing is less accurate because of the possibility of human error, whereas automation testing is more reliable because it is code and script based.

- Manual testing is a time-consuming process, whereas automation testing is much faster.

- Manual testing is possible without programming knowledge, whereas automation testing is not possible without programming knowledge.

- Manual testing allows random (ad hoc) testing, whereas automation testing does not.

- ------------------------------------------------------------------------

Defect:

- Definition: A defect is a deviation from the expected behavior or specification in the software application.

- Example: Suppose a login form should validate the password length, but it fails to do so, allowing users to set a password shorter than the specified minimum length.

Bug:

- Definition: A bug is a coding error that causes a defect in the software.

- Example: In a banking application, a bug in the code might result in incorrect calculations of interest rates, leading to financial discrepancies.

Error:

- Definition: An error is a human action that produces an incorrect or unexpected result.

- Example: During data entry, a user accidentally enters a negative value for a quantity, causing errors in subsequent calculations.

Fault:

- Definition: A fault is a defect in the software that may or may not result in failure.

- Example: In an e-commerce application, a fault in the payment processing module may lead to occasional payment failures for certain users.

Failure:

- Definition: A failure occurs when the software does not perform as expected and deviates from its intended behavior.

- Example: A failure could be the inability of a messaging app to send messages during peak hours due to a server overload.
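The difference between a fault and a failure can be sketched in a few lines of Python. This is only an illustration: the function names and the minimum length of 8 are assumptions made for the example, loosely based on the password-length defect described above. The fault (a wrong comparison operator) is always present in the code, but a failure is observed only for the one input that exercises it.

```python
MIN_LENGTH = 8  # assumed minimum password length, for illustration only


def is_valid_password_faulty(password: str) -> bool:
    # Fault: the comparison should be >=, so a password of exactly
    # MIN_LENGTH characters is wrongly rejected.
    return len(password) > MIN_LENGTH


def is_valid_password_fixed(password: str) -> bool:
    # Corrected comparison: MIN_LENGTH characters or more are accepted.
    return len(password) >= MIN_LENGTH


# The fault stays dormant for most inputs: no failure is observed.
print(is_valid_password_faulty("a" * 12))  # True, as expected
print(is_valid_password_faulty("a" * 3))   # False, as expected

# Only the boundary input triggers the fault and produces a failure.
print(is_valid_password_faulty("a" * 8))   # False, although True was expected
print(is_valid_password_fixed("a" * 8))    # True
```

A test suite that never tries a password of exactly the minimum length would pass against the faulty version, which is why a fault "may or may not result in failure".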

3.Testing principles:

1. Testing shows the presence of defects. Testing is aimed at detecting the defects within a piece of software. But no matter how thoroughly the product is tested, we can never be 100 percent sure that there are no defects. We can only use testing to reduce the number of unfound issues.

2. Exhaustive testing is impossible. There is no way to test all combinations of data inputs, scenarios, and preconditions within an application. For example, if a single app screen contains 10 input fields with 3 possible value options each, then to cover all possible combinations test engineers would need to create 59,049 (3^10) test scenarios. And what if the app contains 50+ such screens? Rather than spend weeks creating millions of unlikely scenarios, it is better to focus on the potentially more significant ones.

Exhaustive testing: an impossible goal, because not every combination of inputs and preconditions can be tested.

3. Early testing. The cost of an error grows exponentially throughout the stages of the SDLC. Therefore it is important to start testing the software as soon as possible so that the detected issues are resolved and do not snowball.

4. Defect clustering. This principle is often referred to as an application of the Pareto principle to software testing. This means that approximately 80 percent of all errors are usually found in only 20 percent of the system modules. Therefore, if a defect is found in a particular module of a software program, the chances are there might be other defects. That is why it makes sense to test that area of the product thoroughly.

5. Pesticide paradox. Running the same set of tests again and again won’t help you find more issues. As soon as the detected errors are fixed, these test scenarios stop finding new defects. Therefore, it is important to review and update the tests regularly in order to adapt and potentially find more errors.

6. Testing is context dependent. Depending on their purpose or industry, different applications should be tested differently. While safety could be of primary importance for a fintech product, it is less important for a corporate website. The latter, in turn, puts an emphasis on usability and speed.

7. Absence-of-errors fallacy. The complete absence of errors in your product does not necessarily mean its success. No matter how much time you have spent polishing your code or improving the functionality, if your product is not useful or does not meet user expectations, it won’t be adopted by the target audience.
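The arithmetic behind principle 2 can be checked with a short Python sketch. The figures (10 fields, 3 options each, 50 screens) come from the example in the text; treating the 50 screens as independent and simply adding up their scenarios is an assumption made here to keep the estimate simple.

```python
from itertools import product

fields = 10
options_per_field = 3

# Each field is chosen independently, so the counts multiply: 3 ** 10.
combinations_per_screen = options_per_field ** fields
print(combinations_per_screen)  # 59049

# Enumerating a smaller screen (4 fields) confirms the formula: 3 ** 4 == 81.
small_screen = list(product(range(options_per_field), repeat=4))
print(len(small_screen))  # 81

# Assumption: 50 similar screens, each tested on its own.
screens = 50
print(combinations_per_screen * screens)  # 2952450
```

Even under this simplified assumption the count runs into the millions, which is why test design techniques that pick representative cases are used instead.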

While the above-listed principles are undisputed guidelines for every software testing professional, there are more aspects to consider. Some sources note other principles in addition to the basic ones:

- Testing must be an independent process handled by unbiased professionals.

- Test for invalid and unexpected input values as well as valid and expected ones.

- Testing should be performed only on a static piece of software (no changes should be made in the process of testing).

- Use exhaustive and comprehensive documentation to define the expected test results.

Typical Objectives of Testing:

For any given project, the objectives of testing may include:

- To evaluate work products such as requirements, user stories, design, and code

- To verify whether all specified requirements have been fulfilled

- To validate whether the test object is complete and works as the users and other stakeholders expect

- To build confidence in the level of quality of the test object

- To prevent defects

- To find failures and defects

- To provide sufficient information to stakeholders to allow them to make informed decisions, especially regarding the level of quality of the test object

- To reduce the level of risk of inadequate software quality (e.g., previously undetected failures occurring in operation)

- To comply with contractual, legal, or regulatory requirements or standards, and/or to verify the test object’s compliance with such requirements or standards

The objectives of testing can vary, depending upon the context of the component or system being tested, the test level, and the software development lifecycle model.

4.Why is software testing?

5. What are some recent major computer system failures caused by software bugs?

- In March of 2012 the Initial Public Offering of the stock of a new stock exchange was cancelled due to software bugs in their trading platform that interfered with trading in stocks including their own IPO stock, according to media reports. The high-speed trading platform reportedly was already handling more than 10 percent of all trading in U.S. securities, but the processing of initial IPO trading was new for the system, and though it had undergone testing, it was unable to properly handle the IPO initial trades. The problem also briefly affected trading of other stocks and other stock exchanges.

- It was reported that software problems in an automated highway toll charging system caused erroneous charges to thousands of customers in a short period of time in December 2011.

- A U.S. county found that their state's computer software assigned thousands of voters to invalid voting locations in November 2011 for an upcoming election due to the system's problems accepting new voting district boundary information.

- In August 2011, a major North American retailer initiated its own online e-commerce website, after contracting it out for many years. It was reported that within the first few months the site crashed six times, home page links were found not to work, gift registries were reported not working properly, and the online division's president left the company.

- A new U.S.-government-run credit card complaint handling system was not working correctly according to August 2011 news reports. Banks were required to respond to complaints routed to them from the system, but due to system bugs the complaints were not consistently being routed to companies as expected. Reportedly the system had not been properly tested.

- News reports in Asia in July of 2011 reported that software bugs in a national computerized testing and grading system resulted in incorrect test results for tens of thousands of high school students. The national education ministry had to reissue grade reports to nearly 2 million students nationwide.

- In April of 2011 bugs were found in popular smartphone software that resulted in long-term data storage on the phone that could be utilized in location tracking of the phone, even when it was believed that locator services in the phone were turned off. A software update was released several weeks later which was expected to resolve the issues.

- Software problems in a new software upgrade for farecards in a major urban transit system reportedly resulted in a loss of a half million dollars before the software was fixed, according to October 2010 news reports.

6. Why does software have bugs?

- miscommunication or no communication - as to specifics of what an application should or shouldn't do (the application's requirements).

- software complexity - the complexity of current software applications can be difficult to comprehend for anyone without experience in modern-day software development. Multi-tier distributed systems, applications utilizing multiple local and remote web services applications, data communications, enormous relational databases, security complexities, and sheer size of applications have all contributed to the exponential growth in software/system complexity.

- programming errors - programmers, like anyone else, can make mistakes.

- changing requirements (whether documented or undocumented) - the end-user may not understand the effects of changes, or may understand and request them anyway - redesign, rescheduling of engineers, effects on other projects, work already completed that may have to be redone or thrown out, hardware requirements that may be affected, etc. If there are many minor changes or any major changes, known and unknown dependencies among parts of the project are likely to interact and cause problems, and the complexity of coordinating changes may result in errors. Enthusiasm of engineering staff may be affected. In some fast-changing business environments, continuously modified requirements may be a fact of life. In this case, management must understand the resulting risks, and QA and test engineers must adapt and plan for continuous extensive testing to keep the inevitable bugs from running out of control - see 'What can be done if requirements are changing continuously?' in the LFAQ. Also see information about 'agile' approaches such as XP, in Part 2 of the FAQ.

- time pressures - scheduling of software projects is difficult at best, often requiring a lot of guesswork. When deadlines loom and the crunch comes, mistakes will be made.

- egos - people prefer to say things like:

- poorly documented code - it's tough to maintain and modify code that is badly written or poorly documented; the result is bugs. In many organizations management provides no incentive for programmers to document their code or write clear, understandable, maintainable code. In fact, it's usually the opposite: they get points mostly for quickly turning out code, and there's job security if nobody else can understand it ('if it was hard to write, it should be hard to read').

- software development tools - visual tools, class libraries, compilers, scripting tools, etc. often introduce their own bugs or are poorly documented, resulting in added bugs.

7. How exactly is testing different from QA/QC?

| Quality Assurance | Quality Control |
| --- | --- |
| It is preventive in nature | It is detective in nature |
| Helps establish process | Relates to a specific product or service |
| Sets up measurement programs to evaluate process | Verifies whether specific attributes are present in the product/service |
| Identifies weaknesses in process and improves them | Identifies defects for correction |
| Management responsibility, frequently performed by a staff function | Responsibility of team/worker |
| Concerned with all products produced by the process | Concerned with a specific product |
| Is a quality control over the quality control activity | |

Verification typically involves reviews and meetings to evaluate documents, plans, code, requirements, and specifications. This can be done with checklists, issues lists, walkthroughs, and inspection meetings.

A 'walkthrough' is an informal meeting for evaluation or informational purposes. Little or no preparation is usually required.

An inspection is more formalized than a 'walkthrough', typically with 3-8 people including a moderator, reader, and a recorder to take notes. The subject of the inspection is typically a document such as a requirements spec or a test plan, and the purpose is to find problems and see what's missing, not to fix anything. Attendees should prepare for this type of meeting by reading through the document; most problems will be found during this preparation. The result of the inspection meeting should be a written report. Thorough preparation for inspections is difficult, painstaking work, but is one of the most cost-effective methods of ensuring quality.

Quality software is reasonably bug-free, delivered on time and within budget, meets requirements and/or expectations, and is maintainable.

| Verification | Validation |
| --- | --- |
| The verifying process includes checking documents, design, code and program | It is a dynamic mechanism of testing and validating the actual product |
| It does not involve executing the code | It always involves executing the code |
| Verification uses methods like reviews, walkthroughs, inspections and desk-checking | It uses methods like black-box testing, white-box testing, grey-box testing and non-functional testing |
| It checks whether the software conforms to the specification | It checks whether the software meets the requirements and expectations of the customer |
| It finds bugs early in the development cycle | It can find bugs that the verification process cannot catch |
| Target is application and software architecture, specification, complete design, high-level and database design, etc. | Target is the actual product |
| The QA team does verification and makes sure that the software is as per the requirements in the SRS document | With the involvement of the testing team, validation is executed on the software code |
| It comes before validation | It comes after verification |

Verification and Validation: Differences in Functions

Verification and validation are the main aims of the workbench concept, so it is important to know the difference between them and to outline the specific elements of each process clearly:

Verification

- Checks program, documents, and design.

- Reviews, desk-checking, walkthroughs, and inspection methods.

- Check of accordance with the specified requirements.

- Bug detection is performed on the cycle of early development.

- It precedes the validation.

Validation

- It is a process of testing and validating the real product.

- It uses non-functional testing, Black Box Testing, and White Box Testing.

- Checks whether the software is in compliance with customers’ expectations.

- It can detect bugs, which are missed by verification.

- It is performed when the verification is done.

We can conclude that verification is conducted at the initial stage, before validation, and verifies the input requirements. Validation checks the examination performed by verification and detects the missed issues to bring the real product closer to the definition of done.

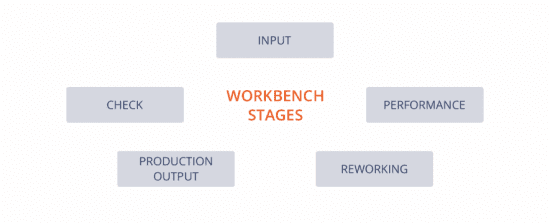

Workbench Concept: Goals and Stages

This is a method that examines and verifies how testing is performed by documenting it in detail. A workbench has common stages and steps that apply to different test assignments. The common stages of each workbench include:

Input. It is the initial workbench stage. Each assignment should define its input and output requirements so that the available parameters and expected results are known. Each workbench has its specific inputs depending on the type of product under testing.

Performance. The main aim of this stage is to transform the initial parameters into the required outcome and reach the prescribed results.

Check. It is an examination of the output parameters after the performance phase to verify that they match the expected ones.

Production output. It is the final stage of a workbench, reached when the check confirms that the performance stage was carried out properly.

Reworking. If the outcome parameters do not match the desired result, it is necessary to return to the performance phase and repeat it from the beginning.
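The workbench stages can be read as a small loop: take the input, perform the task, check the output, and either release it or rework. The Python sketch below is only one possible reading of that flow. The names `run_workbench`, `perform`, `check` and the `max_attempts` limit are all hypothetical, introduced here for illustration.

```python
def run_workbench(task_input, perform, check, max_attempts=3):
    """Input -> performance -> check -> production output, with rework on failure."""
    for attempt in range(1, max_attempts + 1):
        output = perform(task_input, attempt)   # performance stage
        if check(output):                       # check stage
            return output, attempt              # production output
        # Check failed: rework, i.e. return to the performance stage.
    raise RuntimeError("check still failing after rework")


def perform(text, attempt):
    # Hypothetical task: normalise a document title.
    # The first attempt forgets to strip the surrounding spaces.
    return text.title() if attempt == 1 else text.strip().title()


def check(output):
    # The output is acceptable only if it has no surrounding whitespace.
    return output == output.strip()


result, attempts = run_workbench("  test plan ", perform, check)
print(result, attempts)  # Test Plan 2
```

The attempt limit is a design choice of this sketch rather than part of the workbench concept; it simply prevents an endless rework loop.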

SDLC: software development life cycle:

A framework that describes the activities performed at each stage of a software development project :

Feasibility Study

|

|

|

|

|

- This is the longest phase.

- This phase consists of front end + middleware + back end.

- In the front end: development coding is done, and even SEO (Search Engine Optimization) settings are configured. The front end is built using HTML5, JavaScript and CSS.

- In the middleware: it connects the front end and the back end. The middleware is built using Java, C#, Python, Ruby, C++, etc.

- In the back end: the database is created. The back end is built using databases such as MySQL, Oracle, MongoDB, Microsoft SQL Server, etc.

After successful testing the product is delivered/deployed to the client, and the client is trained in how to use the product.

Once the product has been delivered to the client, maintenance starts: whenever the client comes up with an error, the issue is fixed.

Software Testing Life Cycle or Testing Process:

Test Design: The activity of deriving and specifying test cases from test conditions.

4)review the testcase document

In a false positive, the tester reports a defect which is not a defect. For example, while the tester was testing the software, the website didn't load because the internet connection had dropped, and he reported it as a defect.

In a false negative, there is a defect in the software but the tester doesn't find it. For example, the tester executed all the test cases for the mobile app in portrait mode, but there are defects that appear only in landscape mode, so he didn't find or report them.

False negatives are tests that do not detect defects that they should have detected; false positives are reported as defects, but aren’t actually defects.

A developer finds and fixes a defect: Fixing the defect is debugging no matter who found the defect

i. Delaying the release date because the UAT build is not ready yet

-This is a control activity because here we applied a corrective action to get a test project on track.

ii. Calculating the number of test cases executed during the last iteration

-This is a monitoring activity because here we are checking the status of testing activities.

iii. Holding a retrospective meeting at the end of an iteration

-This is a monitoring activity because here we are checking the status of testing activities. The retrospective meeting deals with testing & non-testing issues, but that doesn't mean that it is not a test monitoring activity.

iv. Changing the story points of a user story from 5 to 20 because a new risk has been identified

-This is a control activity because here we applied a corrective action to get a project on track. Changing the story points is mainly not the responsibility of the tester but if we consider it as a reviewing activity, it should be a control activity.

v. Looking at the burn-down chart and analyzing points where the team was off-track

-This is a monitoring activity because here we are checking the status of testing activities. The burn-down chart deals with testing & non-testing issues, but that doesn't mean that looking at it is not a test monitoring activity.

==========================

Difference between Windows And Web Based Applications:

===============================================

TEST LEVELS:

1. Development Environment: Used for writing and testing code, typically on a developer's local machine.

2. Integration Environment: Integrates different software components, ensures they work together.

3. Staging Environment: Pre-production environment, mimics production for final testing.

4. User Acceptance Testing (UAT) Environment: End-users test software for requirements, mirrors production closely.

5. Production Environment: Live environment for end-users, where software is used as intended.

6. Performance Testing Environment: Tests software under load, matches production hardware.

7. Security Testing Environment: Focuses on software security, uses security tools and configurations.

8. Sandbox or Experimental Environment: For experimental and exploratory testing, allows trying new ideas safely.

Unit Testing: Unit testing involves verification of individual components or units of source code. A unit is the smallest testable part of any software. Unit testing focuses on the functionality of individual components within the application. It is often used by developers to discover bugs in the early stages of the development cycle. Developers perform unit testing in the dev environment.

A unit test case would be as fundamental as clicking a button on a web page and verifying whether it performs the desired operation. For example, ensuring that a share button on a webpage lets you share the correct page link.

Unit testing Example – The battery is checked for its life, capacity and other parameters. Sim card is checked for its activation.
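The share-button example above can be turned into a small unit test. The sketch below is a hypothetical illustration: `build_share_link` and the `share.example.com` endpoint are invented for the example and do not belong to any real application. It tests one unit in isolation, without a browser or a running website.

```python
from urllib.parse import parse_qs, urlencode, urlparse

SHARE_ENDPOINT = "https://share.example.com/submit"  # hypothetical endpoint


def build_share_link(page_url: str) -> str:
    """Unit under test: return the link a share button would open for page_url."""
    if not page_url:
        raise ValueError("page_url must not be empty")
    return f"{SHARE_ENDPOINT}?{urlencode({'url': page_url})}"


def test_share_link_points_to_the_current_page():
    page = "https://example.com/articles/42?ref=home"
    link = build_share_link(page)
    # Decode the query string and check that the shared URL is the page itself.
    query = parse_qs(urlparse(link).query)
    assert query["url"] == [page]


def test_empty_page_url_is_rejected():
    try:
        build_share_link("")
    except ValueError:
        return
    raise AssertionError("expected ValueError for an empty page URL")


test_share_link_points_to_the_current_page()
test_empty_page_url_is_rejected()
print("all unit tests passed")
```

In a real project these test functions would normally be collected and run by a test runner such as pytest or unittest instead of being called by hand.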

Component Testing

Testing a module or component independently to verify its expected output is called component testing. Generally, component testing is done to verify the functionality and/or usability of a component, but it is not restricted to these. A component can be anything that takes input(s) and delivers some output. For example, a module of code, a web page, a screen, or even a system inside a bigger system can be a component.

From the above picture, let’s see what we can test in component 1 (login) separately:

- Testing the UI part for usability and accessibility

- Testing the Page loading to ensure performance

- Testing the login functionality with valid and invalid user credentials

Integration testing: Integration testing is the next step after component testing. Multiple components (modules) are integrated as a single unit and then tested to check that data flows correctly from one module to the others. For example, testing a series of webpages in a particular order to verify interoperability.

This approach helps QAs evaluate how several components of the application work together to provide the desired result. Performing integration testing in parallel with development allows developers to detect and locate bugs faster.

| STUB | DRIVER |
| --- | --- |
| 1. Temporary program used instead of sub-programs that are under construction | 1. Temporary program used instead of the main program, which is under construction |
| 2. Used in the top-down approach | 2. Used in the bottom-up approach |
| 3. Other name is “Called Programs” | 3. Other name is “Calling Programs” |
| 4. Returns the control to the main program | |
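The stub and driver roles from the table can be sketched in Python. Everything here is hypothetical and chosen only for illustration: the order and tax modules, the function names, and the 10% tax rate are assumptions, not part of any real system.

```python
def payment_stub(amount: float) -> str:
    """Stub: stands in for a payment sub-program that is not built yet.

    It is a 'called program': the module under test calls it, and it
    returns control to the caller with a canned answer.
    """
    return "APPROVED"


def place_order(amount: float, pay=payment_stub) -> str:
    """High-level module under test (top-down approach)."""
    if amount <= 0:
        return "REJECTED"
    return "CONFIRMED" if pay(amount) == "APPROVED" else "FAILED"


def calculate_tax(amount: float) -> float:
    """Low-level module under test (bottom-up approach); 10% rate is assumed."""
    return round(amount * 0.10, 2)


def tax_driver() -> list:
    """Driver: a 'calling program' that stands in for the unfinished main program."""
    return [calculate_tax(amount) for amount in (0, 100, 19.99)]


print(place_order(50))  # CONFIRMED
print(tax_driver())     # [0.0, 10.0, 2.0]
```

Passing the payment function as a parameter is one simple way to swap the stub for the real sub-program once it exists; other approaches (mocking libraries, dependency injection frameworks) serve the same purpose.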

testing user experience and more.

Teams perform several types of system testing like regression testing, stress testing, functional testing and more, depending on their access to time and resources.

Example:

Let us understand these three types of testing with an oversimplified example.

E.g. For a functional mobile phone, the main parts required are “battery” and “sim card”.

Functional Testing Example – The functionality of a mobile phone is checked in terms of its features and battery usage as well as sim card facilities.

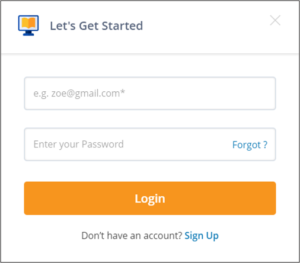

Almost every web application requires its users/customers to log in. For that, every application has to have a “Login” page which has these elements:

- Account/Username

- Password

- Login/Sign in Button

For Unit Testing, the following may be the test cases:

- Field length – username and password fields.

- Input field values should be valid.

- The login button is enabled only after valid values (Format and lengthwise) are entered in both the fields.
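The unit-level checks listed above can be sketched as small, independent functions with their own assertions. The limits used here (usernames up to 30 characters, passwords of 6 to 12 characters, and the allowed username characters) are assumptions made for the example; a real application would take them from its requirements.

```python
import re

USERNAME_MAX = 30        # assumed field length limit
PASSWORD_MIN = 6         # assumed
PASSWORD_MAX = 12        # assumed
USERNAME_PATTERN = re.compile(r"[A-Za-z0-9_.]+")  # assumed allowed characters


def is_valid_username(value: str) -> bool:
    """Field length and format check for the username field."""
    return len(value) <= USERNAME_MAX and USERNAME_PATTERN.fullmatch(value) is not None


def is_valid_password(value: str) -> bool:
    """Field length check for the password field."""
    return PASSWORD_MIN <= len(value) <= PASSWORD_MAX


def login_button_enabled(username: str, password: str) -> bool:
    """The button is enabled only when both fields hold valid values."""
    return is_valid_username(username) and is_valid_password(password)


# Field length: username and password fields.
assert is_valid_username("a" * 30)
assert not is_valid_username("a" * 31)
assert not is_valid_password("a" * 5)

# Input field values should be valid.
assert not is_valid_username("bad name!")

# The login button is enabled only after valid values are entered in both fields.
assert login_button_enabled("alice", "secret1")
assert not login_button_enabled("alice", "")
assert not login_button_enabled("", "secret1")
print("login unit checks passed")
```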

For Integration Testing, the following may be the test cases:

- The user sees the welcome message after entering valid values and pushing the login button.

- The user should be navigated to the welcome page or home page after valid entry and clicking the Login button.

Now, after unit and integration testing are done, let us see the additional test cases that are considered for functional testing:

- The expected behavior is checked, i.e. is the user able to log in by clicking the login button after entering a valid username and password values.

- Is there a welcome message that is to appear after a successful login?

- Is there an error message that should appear on an invalid login?

- Are there any stored site cookies for login fields?

- Can an inactivated user log in?

- Is there any ‘forgot password’ link for the users who have forgotten their passwords?


Acceptance testing is performed both internally and externally.

Internal acceptance testing (also known as alpha testing) is performed by the members within the organization.

External acceptance testing (also known as beta testing) is performed by a limited number of actual end-users. This approach helps teams evaluate how well the product satisfies the user’s standards. It also identifies bugs in the last stage before releasing a product.

TestPlan:

| Test Plan | Test Strategy |
| --- | --- |
| A test plan for a software project can be defined as a document that defines the scope, objective, approach and emphasis of a software testing effort | Test strategy is a set of guidelines that explains test design and determines how testing needs to be done |
| Components of a test plan include: test plan id, features to be tested, test techniques, testing tasks, features pass or fail criteria, test deliverables, responsibilities, schedule, etc. | Components of a test strategy include: objectives and scope, documentation formats, test processes, team reporting structure, client communication strategy, etc. |
| Test plan is carried out by a testing manager or lead and describes how to test, when to test, who will test and what to test | A test strategy is carried out by the project manager. It says what type of technique to follow and which module to test |
| Test plan narrates about the specification | Test strategy narrates about the general approaches |
| Test plan can change | Test strategy cannot be changed |
| Test planning is done to determine possible issues and dependencies in order to identify the risks | It is a long-term plan of action. You can abstract information that is not project specific and put it into the test approach |
| A test plan exists individually | In smaller projects, test strategy is often found as a section of a test plan |
| It is defined at project level | It is set at organization level and can be used by multiple projects |

TESTING TECHNIQUES:

Example: A simple example of black-box testing is a TV (Television). As a user, we watch the TV but we don’t need the knowledge of how the TV is built and how it works, etc. We just need to know how to operate the remote control to switch on, switch off, change channels, increase/decrease volume, etc.

In this example, The TV is your AUT (Application Under Test).

The remote control is the User Interface (UI) that you use to test.

You just need to know how to use the application.

- Tester can be non-technical.

- Used to verify contradictions between the actual system and the specifications.

- Test cases can be designed as soon as the functional specifications are complete.

- The test inputs need to be taken from a large sample space.

- It is difficult to identify all possible inputs in limited testing time, so writing test cases is slow and difficult.

- Some paths may remain unidentified during this testing.

🌟 Valid test data. It is necessary to verify whether the system functions are in compliance with the requirements, and the system processes and stores the data as intended.

🌟 Invalid test data. QA engineers should inspect whether the software correctly processes invalid values, shows the relevant messages, and notifies the user that the data are improper.

🌟 Boundary test data.

🌟 Wrong data.

🌟 Absent data.

- For each piece of specification, generate one or more equivalence classes.

- Label the classes as “valid” or “invalid”.

- Generate one test case for each invalid equivalence class.

- Generate a test case that covers as many valid equivalence classes as possible.

Example: an input box accepts 50 characters. The range can be divided into the classes 1 to 10, 11 to 20, 21 to 30, 31 to 40, and 41 to 50.

Dividing the input range into equivalence classes is called ECP.
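The 50-character input box can be used to sketch equivalence class partitioning in Python. The five valid classes come from the text; the two invalid classes (empty input and over-long input, with an arbitrary upper bound of 60) and the `accepts` function are assumptions added for illustration. One representative length is picked per class instead of testing every length.

```python
# Valid classes from the example; invalid classes are assumed for illustration.
VALID_CLASSES = [(1, 10), (11, 20), (21, 30), (31, 40), (41, 50)]
INVALID_CLASSES = [(0, 0), (51, 60)]  # empty input, over-long input


def accepts(text: str) -> bool:
    """Hypothetical rule of the input box: 1 to 50 characters are accepted."""
    return 1 <= len(text) <= 50


def representative(low: int, high: int) -> int:
    """Pick one length from the middle of the class to stand for the whole class."""
    return (low + high) // 2


for low, high in VALID_CLASSES:
    assert accepts("x" * representative(low, high)), (low, high)

for low, high in INVALID_CLASSES:
    assert not accepts("x" * representative(low, high)), (low, high)

print([representative(low, high) for low, high in VALID_CLASSES + INVALID_CLASSES])
# [5, 15, 25, 35, 45, 0, 55]
```

Seven test inputs stand in for every possible length, on the assumption that all values in a class are handled the same way by the software.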

2. Boundary value analysis (BVA): Testing an input box/text box/edit box field at the boundary values (in, at, outside) is called boundary value analysis.

Generate test cases for the boundary values:

- Minimum value, minval+1, minval-1

- Maximum value, maxval+1, maxval-1

Examples: The password should accept 6 to 12 characters.

- Min value = 6, min+1 → 6+1 = 7, min-1 → 6-1 = 5

- Max value = 12, max+1 = 13, max-1 = 11

- Test the password field without any characters (empty).

- Test that the password is displayed in masked format (*, .).

- Enter special characters: ~!@#$%^&*,.;”’?><

password field accepts 6 to 12 characters

password should contain at least one uppercase letter

password should contain at least one number

password should contain at least one special character

Test case design:

verify entering invalid password less than 6 characters

verify entering invalid password more than 12 characters

verify leaving the password field blank

verify entering valid data in the password field(6 char/12/in between 6 & 12)

verify entering only numbers in password field

verify entering only alphabets in password field

verify entering only special characters in password field

verify entering only alphanumerics in password field

verify entering alphabets and special characters in password field

verify entering numbers and special characters in password field

verify entering without uppercase letter in the password field
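The test cases above can be turned into a small table-driven check. The sketch below is an assumption-laden illustration, not the application's real validator: `validatePassword` is a hypothetical function written only from the four stated rules, and it treats any character that is not an English letter or a digit as a special character.

```javascript
// Hypothetical validator built from the four password rules in these notes.
function validatePassword(password) {
  const errors = [];
  if (password.length < 6 || password.length > 12) errors.push("length must be 6 to 12");
  if (!/[A-Z]/.test(password)) errors.push("needs an uppercase letter");
  if (!/[0-9]/.test(password)) errors.push("needs a number");
  // Assumption: "special" means anything other than a letter or a digit.
  if (!/[^A-Za-z0-9]/.test(password)) errors.push("needs a special character");
  return errors;
}

// Each row is one test case: the input and whether it should be accepted.
const cases = [
  { input: "Ab1!x", valid: false },         // 5 characters, below the minimum
  { input: "Ab1!xy", valid: true },         // 6 characters, at the minimum
  { input: "Ab1!xyzxyzxy", valid: true },   // 12 characters, at the maximum
  { input: "Ab1!xyzxyzxyz", valid: false }, // 13 characters, above the maximum
  { input: "", valid: false },              // blank field
  { input: "12345678", valid: false },      // only numbers
  { input: "abcdEFGH", valid: false },      // only alphabets
  { input: "!@#$%^&*", valid: false },      // only special characters
  { input: "ab1!xyz", valid: false },       // no uppercase letter
];

for (const { input, valid } of cases) {
  const accepted = validatePassword(input).length === 0;
  console.log(JSON.stringify(input), accepted === valid ? "PASS" : "FAIL");
}
```

Keeping the cases in a table makes it easy to add the remaining combinations (alphanumeric only, alphabets plus special characters, and so on) without changing the loop.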

Task 2)

Write test cases for the requirements below.

Password:

- must contain 6-12 characters

- must contain both upper and lower case characters

- must contain numeric characters

- must contain special characters

- should not contain username or firstname or lastname

mobile number

847 987 4279

verify entering 10 numbers in the mobile number field

verify entering more than 10

verify entering less than 10

verify leaving mobile number field blank

Boundary Value Testing

This type of testing checks the behavior of the application at its boundaries.

Boundary Value Testing is performed to check whether defects exist at boundary values. It is used for inputs that accept a range of numbers. Each range has a lower and an upper boundary, and testing is performed on and around these boundary values.

If testing requires a test range of numbers from 1 to 500 then Boundary Value Testing is performed on values at 0, 1, 2, 499, 500 and 501.
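As a minimal sketch, the boundary values for any numeric range can be generated mechanically. The helper name `boundaryValues` is hypothetical; it simply returns the value just below, at, and just above each boundary.

```javascript
// Hypothetical helper: returns the six boundary test values for a range.
function boundaryValues(min, max) {
  if (min > max) throw new RangeError("min must not exceed max");
  return [min - 1, min, min + 1, max - 1, max, max + 1];
}

console.log(boundaryValues(1, 500)); // [0, 1, 2, 499, 500, 501]
console.log(boundaryValues(6, 12));  // [5, 6, 7, 11, 12, 13]
```

The second call corresponds to the password length example (6 to 12 characters) used earlier in these notes.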

What is Decision Table in Software Testing?

Why is Decision Table Important?

- Decision tables are a very helpful test design technique.

- They help testers explore the effects of combinations of different inputs and other software states that implement business rules.

- They provide a systematic way of stating complex business rules, which benefits developers as well as testers.

- They assist the development process and help developers do a better job. Note that testing every combination might be impractical.

- They are a preferred choice for testing and requirements management.

- They are also used to model complicated logic.

Advantages of Decision Table in Software Testing

- Any complex business flow can be easily converted into the test scenarios & test cases using this technique.

- Decision tables work iteratively. Therefore, the table created at the first iteration is used as the input table for the next tables. The iteration is done only if the initial table is not satisfactory.

- Simple to understand and everyone can use this method to design the test scenarios & test cases.

- These tables guarantee that we consider every possible combination of condition values. This is known as its completeness property.

Way to use Decision Table: Example

| Conditions | Rule 1 | Rule 2 | Rule 3 | Rule 4 |
|---|---|---|---|---|
| Username | F | T | F | T |
| Password | F | F | T | T |
| Output | E | E | E | H |

- T – Correct username/password

- F – Wrong username/password

- E – Error message is displayed

- H – Home screen is displayed

- Case 1 – Username and password both were wrong. The user is shown an error message.

- Case 2 – Username was correct, but the password was wrong. The user is shown an error message.

- Case 3 – Username was wrong, but the password was correct. The user is shown an error message.

- Case 4 – Username and password both were correct, and the user is navigated to the homepage.
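The four rules of the login decision table can be written as data and run against the system under test. In this sketch the `login` function is a hypothetical stand-in for the real application; it only encodes the behavior the table describes.

```javascript
// Hypothetical stub for the system under test: "H" = home screen, "E" = error message.
function login(usernameCorrect, passwordCorrect) {
  return usernameCorrect && passwordCorrect ? "H" : "E";
}

// One entry per rule (column) of the decision table.
const rules = [
  { rule: 1, username: false, password: false, expected: "E" },
  { rule: 2, username: true, password: false, expected: "E" },
  { rule: 3, username: false, password: true, expected: "E" },
  { rule: 4, username: true, password: true, expected: "H" },
];

const results = rules.map((r) => ({
  rule: r.rule,
  passed: login(r.username, r.password) === r.expected,
}));
console.log(results);
```

With two true/false conditions there are 2 × 2 = 4 combinations, so the four rules cover every possible combination of condition values.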

- A sensible first test case is to enter the correct PIN on the first attempt.

- The second test should be to enter an incorrect PIN each time, so that the system rejects the card.

- To test all transitions, first test the case where the PIN is incorrect the first time but correct the second time, then test the case where the PIN is correct on the third try.

- These additional tests are less important than the first two.
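The PIN scenarios above can be modeled as a small state machine. The sketch below is an assumption: the state names and the limit of three attempts are hypothetical choices inferred from the "correct on the third try" case, not a description of any real ATM.

```javascript
// Hypothetical model: the card is rejected after maxTries wrong PINs.
function runPinSession(correctPin, attempts, maxTries = 3) {
  let tries = 0;
  for (const pin of attempts) {
    tries += 1;
    if (pin === correctPin) return { state: "ACCESS_GRANTED", tries };
    if (tries === maxTries) return { state: "CARD_REJECTED", tries };
  }
  // Ran out of input before reaching a final state.
  return { state: "WAITING_FOR_PIN", tries };
}

// Test 1: correct PIN on the first attempt.
console.log(runPinSession("1234", ["1234"]));
// Test 2: incorrect PIN every time, so the card is rejected.
console.log(runPinSession("1234", ["0000", "1111", "2222"]));
// Remaining transitions: correct on the second and on the third try.
console.log(runPinSession("1234", ["0000", "1234"]));
console.log(runPinSession("1234", ["0000", "1111", "1234"]));
```

Together the four sessions exercise every transition in this small model, which is the goal described in the third bullet.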

Example: A Car mechanic should know the internal structure of the car engine to repair it.

In this example,

The car is the AUT (Application Under Test).

The user is the black box tester.

The mechanic is the white box tester.

To ensure:

- That all independent paths within a module have been exercised at least once.

- That all logical decisions have been verified on their true and false values.

- That all loops have been executed at their boundaries and within their operational bounds.

- That internal data structures have been exercised to check their validity.

To discover the following types of bugs:

- Logical errors, which tend to creep into our work when we design and implement functions, conditions, or controls that are outside the main flow of the program.

- Design errors caused by differences between the logical flow of the program and the actual implementation.

- Typographical errors, found through syntax checking.

We need to write test cases that ensure the complete coverage of the program logic.

For this we need to know the program well, i.e. we should know both the specification and the code to be tested. Knowledge of programming languages and logic is also required.

An introduction to code coverage

Code coverage is a metric that can help you understand how much of your source is tested. It's a very useful metric that can help you assess the quality of your test suite, and we will see here how you can get started with your projects.

How is code coverage calculated?

Code coverage tools will use one or more criteria to determine how your code was exercised or not during the execution of your test suite. The common metrics that you might see mentioned in your coverage reports include:

- Function coverage: how many of the functions defined have been called.

- Statement coverage: how many of the statements in the program have been executed.

- Branch coverage: how many of the branches of the control structures (if statements for instance) have been executed.

- Condition coverage: how many of the boolean sub-expressions have been tested for a true and a false value.

- Line coverage: how many lines of source code have been tested.

These metrics are usually represented as the number of items actually tested, the items found in your code, and a coverage percentage (items tested / items found).
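The percentage in a coverage report is a simple ratio. The helper below is a minimal sketch with a hypothetical name; returning 100 when there are no items to cover is an assumption made for this example, and individual tools may report that case differently.

```javascript
// Hypothetical helper: coverage percentage = items tested / items found * 100.
function coveragePercent(tested, found) {
  if (tested < 0 || found < 0 || tested > found) {
    throw new RangeError("expected 0 <= tested <= found");
  }
  if (found === 0) return 100; // assumption: nothing to cover counts as fully covered
  return (tested / found) * 100;
}

console.log(coveragePercent(45, 60)); // 75
console.log(coveragePercent(1, 2));   // 50
```

The same calculation applies to each metric separately, so a report can show, for example, high function coverage next to much lower branch coverage.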

These metrics are related, but distinct. In the trivial script below, we have a JavaScript function checking whether or not an argument is a multiple of 10. We'll use that function later to check whether or not 100 is a multiple of 10. It'll help understand the difference between function coverage and branch coverage.

    function isMultipleOf10(x) {
      if (x % 10 === 0) return true;
      else return false;
    }

    console.log(isMultipleOf10(100));

We can use the coverage tool istanbul to see how much of our code is executed when we run this script. After running the coverage tool we get a coverage report showing our coverage metrics. We can see that while our function coverage is 100%, our branch coverage is only 50%. We can also see that the istanbul code coverage tool isn't calculating a condition coverage metric.

This is because when we run our script, the else statement is never executed. If we wanted to get 100% coverage, we could simply add another line, essentially another test, to make sure that all branches of the if statement are used.

    function isMultipleOf10(x) {
      if (x % 10 === 0) return true;
      else return false;
    }

    console.log(isMultipleOf10(100));
    console.log(isMultipleOf10(34)); // This will make our code execute the "return false;" statement.

In this example, we were just logging results in the terminal, but the same principle applies when you run your test suite. Your code coverage tool will monitor the execution of your test suite and tell you how many of the statements, branches, functions, and lines were run as part of your tests.

Find the right tool for your project

You might find several options to create coverage reports depending on the language(s) you use. Some of the popular tools are listed below:

- Java: Atlassian Clover, Cobertura, JaCoCo

- JavaScript: istanbul, Blanket.js

- PHP: PHPUnit

- Python: Coverage.py

- Ruby: SimpleCov

What percentage of coverage should you aim for?

There's no silver bullet in code coverage, and a high percentage of coverage could still be problematic if critical parts of the application are not being tested, or if the existing tests are not robust enough to properly capture failures upfront. With that being said, it is generally accepted that 80% coverage is a good goal to aim for. Trying to reach a higher coverage might turn out to be costly, while not necessarily producing enough benefit.

Use coverage reports to identify critical misses in testing

Soon you'll have so many tests in your code that it will be impossible for you to know what part of the application is checked during the execution of your test suite. You'll know what breaks when you get a red build, but it'll be hard for you to understand what components have passed the tests.

This is where the coverage reports can provide actionable guidance for your team. Most tools will allow you to dig into the coverage reports to see the actual items that weren't covered by tests and then use that to identify critical parts of your application that still need to be tested.

Good coverage does not equal good tests

Getting a great testing culture starts by getting your team to understand how the application is supposed to behave when someone uses it properly, but also when someone tries to break it. Code coverage tools can help you understand where you should focus your attention next, but they won't tell you if your existing tests are robust enough for unexpected behaviors.

Achieving great coverage is an excellent goal, but it should be paired with having a robust test suite that can ensure that individual classes are not broken as well as verify the integrity of the system.

Difference Between Black Box And White Box Testing

| S.No | Black Box Testing | White Box Testing |

|---|---|---|

| 1 | The main objective of this testing is to test the functionality/behavior of the application. | The main objective is to test the internal structure of the application. |

| 2 | This can be performed by a tester without any coding knowledge of the AUT (Application Under Test). | Tester should have the knowledge of internal structure and how it works. |

| 3 | Testing can be performed only using the GUI. | Testing can be done at an early stage before the GUI gets ready. |

| 4 | This testing cannot cover all possible inputs. | This testing is more thorough as it can test each path. |

| 5 | Some test techniques include Boundary Value Analysis, Equivalence Partitioning, Error Guessing etc. | Some testing techniques include Conditional Testing, Data Flow Testing, Loop Testing etc. |

| 6 | Test cases should be written based on the Requirement Specification. | Test cases should be written based on the Detailed Design Document. |

| 7 | Test cases will have more details about input conditions, test steps, expected results and test data. | Test cases will be simple with the details of the technical concepts like statements, code coverage etc. |

| 8 | This is performed by professional Software Testers. | This is the responsibility of the Software Developers. |

| 9 | Programming and implementation knowledge is not required. | Programming and implementation knowledge is required. |

| 10 | Mainly used in higher level testing like Acceptance Testing, System Testing etc. | Is mainly used in the lower levels of testing like Unit Testing and Integration Testing. |

| 11 | This is less time consuming and less exhaustive. | This is more time consuming and more exhaustive. |

| 12 | Test data will have wide possibilities so it will be tough to identify the correct data. | It is easy to identify the test data as only a specific part of the functionality is focused at a time. |

| 13 | Main focus of the tester is on how the application is working. | Main focus will be on how the application is built. |

| 14 | Test coverage is less as it cannot create test data for all scenarios. | Almost all the paths/application flow are covered as it is easy to test in parts. |

| 15 | Code related errors cannot be identified or technical errors cannot be identified. | Helps to identify the hidden errors and helps in optimizing code. |

| 16 | Defects are identified once the basic code is developed. | Early defect detection is possible. |

| 17 | User should be able to identify any missing functionalities as the scope of this testing is wide. | Tester cannot identify the missing functionalities as the scope is limited only to the implemented feature. |

| 18 | Code access is not required. | Code access is required. |

| 19 | Test coverage will be less as the tester has limited knowledge about the technical aspects. | Test coverage will be more as the testers will have more knowledge about the technical concepts. |

| 20 | Professional tester focus is on how the entire application is working. | Tester/Developer focus is to check whether the particular path is working or not. |

Test Case Design:

What is a Test Case?

A test case describes an input, action, or event and an expected response, to determine if a feature of a software application is working correctly.

(Or)

A test case is a description of what is to be tested, what data is to be given, and what actions are to be performed to check the actual result against the expected result.

What are the items of Test Case?

Test case items are:

- Test Case Number

- Test Case Name

- FRS number

- Test Data

- Pre-condition

- Description (Steps)

- Expected Result

- Actual Result

- Status (Pass/Fail)

- Remarks

- Defect id
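The items listed above map naturally onto a record. The sketch below is illustrative only: every value (IDs, names, steps) is hypothetical, and the initial "Not Run" status is an assumption added for the example, since the notes list only Pass and Fail.

```javascript
// Hypothetical test case record using the items listed above.
const testCase = {
  number: "TC-001",
  name: "Verify login with valid credentials",
  frsNumber: "FRS-1.1",
  testData: { username: "demo_user", password: "Ab1!xyz" },
  precondition: "Login page is displayed",
  steps: ["Enter username", "Enter password", "Click Submit"],
  expectedResult: "Home screen is displayed",
  actualResult: null,
  status: "Not Run", // assumption: status before execution
  remarks: "",
  defectId: null,
};

// Fills in the actual result and derives the status by comparing it
// with the expected result. Returns a new record; the input is not modified.
function recordResult(tc, actualResult, defectId = null) {
  const passed = actualResult === tc.expectedResult;
  return {
    ...tc,
    actualResult,
    status: passed ? "Pass" : "Fail",
    defectId: passed ? null : defectId,
  };
}

console.log(recordResult(testCase, "Home screen is displayed").status);            // Pass
console.log(recordResult(testCase, "Error message is displayed", "DEF-101").status); // Fail
```

Deriving the status from the comparison, rather than typing it by hand, keeps the Actual Result and Status columns consistent with each other.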

Are test cases reusable?

Yes, test cases can be reused.

Test cases developed for functionality or performance testing can be used for unit, integration, system, regression, and performance testing with a few modifications.

What are the characteristics of good test case?

A good test case should have the following:

TC should start with “what you are testing”.

TC should be independent.

TC should catch the bugs.

TC should be uniform.

e.g. <Action Buttons> “Links”…

Are there any issues to be considered?

Yes, there are a few issues:

All the TCs should be traceable.

There should not be too many duplicate test cases.

Outdated test cases should be removed.

All the test cases should be executable.

Requirement Document Sample:

http://web.cse.ohio-state.edu/~bair.41/616/Project/Example_Document/Req_Doc_Example.html

| TC ID | Pre-condition | Description (steps) | Expected Result | Actual Result | Status | Remarks |
|---|---|---|---|---|---|---|
| Unique test case number | Condition to be satisfied | 1. What is to be tested 2. What data is to be provided 3. What action is to be done | As per FRS | System response | Pass or Fail | If any |
| Yahoo-001 | Yahoo web page should be displayed | 1. Check inbox is displayed 2. User ID/PW 3. Click on submit | System should display the mail box | System response | | |

Usability Test cases :

i. These are general for all kinds of pages of the application.

- Test Case 1: Verify spelling in every screen

- Test Case 2: Verify color uniqueness in screens

- Test Case 3: Verify font uniqueness in screens

- Test Case 4: Verify size uniqueness in every screen

- Test Case 6: Verify alignment

- Test Case 7: Verify name spacing uniqueness in every screen

- Test Case 8: Verify space uniqueness in between controls

- Test Case 9: Verify contrast in between controls

- Test Case 10: Verify related objects grouping

- Test Case 11: Verify group edges/boundaries

- Test Case 12: Verify positions of multiple data objects in screens

- Test Case 13: Verify scroll bars of screens

- Test Case 14: Verify the correctness of image icons

- Test Case 15: Verify tool tips

- Test Case 16: Verify full forms of shortcuts (e.g. abbreviations)

- Test Case 17: Verify shortcut keys in keyboard

- Test case title 1: Verify card insertion

- Test case title 2: Verify operation with wrong angle of card insertion

- Test case title 3: Verify operation with invalid card insertion (e.g. scratches on card, broken card, other bank cards, expired cards, etc.)

- Test case title 4: Verify language selection

- Test case title 5: Verify PIN no. entry

- Test case title 6: Verify operation with wrong PIN no.

- Test case title 7: Verify operation when PIN no. is entered wrongly 3 consecutive times

- Test case title 8: Verify account type selection

- Test case title 9: Verify operation when wrong account type is selected

- Test case title 10: Verify withdrawal option selection

- Test case title 11: Verify amount entry

- Test case title 12: Verify operation with wrong denominations of amount

- Test case title 13: Verify withdrawal operation success (correct amount, correct receipt, and able to take card back)

- Test case title 14: Verify operation when the amount for withdrawal is less than

- Test case title 15: Verify operation when the network has a problem

- Test case title 16: Verify operation when the ATM does not have enough cash

- Test case title 17: Verify operation when the amount for withdrawal is less than

- Test case title 18: Verify operation when the current transaction exceeds the limit on the number of transactions per day

- Test case title 19: Verify cancel after insertion of card

- Test case title 20: Verify cancel after language selection

- Test case title 21: Verify cancel after PIN no. entry

- Test case title 22: Verify cancel after account type selection

- Test case title 23: Verify cancel after withdrawal option selection

- Test case title 24: Verify cancel after amount entry (last operation)

- Test case title 2: Verify shutdown option selection using Alt+F4

- Test case title 3: Verify shutdown option selection using run command

- Test case title 4: Verify shutdown operation

- Test case title 5: Verify shutdown operation when a process is running

--------------------------------------------------------------------------------

ii. Prepare test case titles for washing machine operation.

- Test case title 1: Verify power supply

- Test case title 2: Verify door open

- Test case title 3: Verify water filling with detergent

- Test case title 4: Verify clothes filling

- Test case title 5: Verify door close

- Test case title 6: Verify door close with clothes overflow

- Test case title 7: Verify washing settings selection

- Test case title 8: Verify washing operation

- Test case title 9: Verify washing operation with lack of water

- Test case title 10: Verify washing operation with clothes overload

- Test case title 11: Verify washing operation with improper settings

- Test case title 12: Verify washing operation with machinery problem

- Test case title 13: Verify washing operation with water leakage through door

- Test case title 14: Verify washing operation due to door open in the middle of the

- Test case title 15: Verify washing operation with improper power

Requirements formats: use cases and user stories

Since we have to make functional and nonfunctional requirements understandable for all stakeholders, we must capture them in an easy-to-read format. The two most typical formats are use cases and user stories.

Use cases

Use cases describe the interaction between the system and external users that leads to achieving particular goals.

Each use case includes three main elements:

Actors. These are the external users that interact with the system.

System. The system is described by functional requirements that define the intended behavior of the product.

Goals. The purposes of the interaction between the users and the system are outlined as goals.

There are two ways to represent use cases: a use case specification and a use case diagram.

A use case specification represents the sequence of events and other information related to this use case. A typical use case specification template includes the following information:

- Description,

- Pre- and Post- interaction condition,

- Basic interaction path,

- Alternative path, and

- Exception path.

A use case diagram doesn’t contain a lot of details. It shows a high-level overview of the relationships between actors, different use cases, and the system.

The use case diagram includes the following main elements.

- Use cases. Usually drawn with ovals, use cases represent different interaction scenarios that actors might have with the system (log in, make a purchase, view items, etc.).

- System boundaries. Boundaries are outlined by the box that groups various use cases in a system.

- Actors. These are the figures that depict external users (people or systems) that interact with the system.

- Associations. Associations are drawn with lines showing different types of relationships between actors and use cases.

User stories vs epics vs tasks

User stories

A user story is a documented description of a software functionality seen from the end-user perspective. The user story describes what exactly the user wants the system to do. In Agile projects, user stories are organized in a backlog. Currently, user stories are considered the best format for backlog items.

A typical user story looks like this:

As a <type of user>, I want <some goal> so that <some reason>.

Example:

As an admin, I want to add product descriptions so that users can later view these descriptions and compare the products.

User stories must be accompanied by acceptance criteria. These are the conditions the product must satisfy to be accepted by a user, stakeholders, or a product owner.

Each user story must have at least one acceptance criterion. Effective acceptance criteria must be testable, concise, and completely understood by all team members and stakeholders. We can write them as checklists, in plain text, or using the Given/When/Then format.

Here’s an example of the acceptance criteria checklist for a user story describing a search feature.

- A search field is available on the top bar.

- A search starts when the user clicks Submit.

- The default placeholder is a grey text Type the name.

- The placeholder disappears when the user starts typing.

- The search language is English.

- The user can type no more than 200 symbols.

- It doesn’t support special symbols. If the user has typed a special symbol in the search input, it displays the warning message: Search input cannot contain special symbols.
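Two of the criteria above (the 200-symbol limit and the special-symbol warning) are precise enough to check in code. The sketch below is hypothetical: `checkSearchInput` is not part of any real product, and the definition of a "special symbol" as anything other than an English letter, a digit, or a space is an assumption, because the checklist does not define it.

```javascript
const MAX_LENGTH = 200;
const WARNING = "Search input cannot contain special symbols.";

// Hypothetical check for two acceptance criteria of the search feature.
function checkSearchInput(text) {
  if (text.length > MAX_LENGTH) {
    // The checklist defines no message for this case, so none is returned.
    return { accepted: false, warning: null };
  }
  // Assumption: letters, digits and spaces are the only non-special symbols.
  if (/[^A-Za-z0-9 ]/.test(text)) {
    return { accepted: false, warning: WARNING };
  }
  return { accepted: true, warning: null };
}

// Given a search field, when the user types plain text, then it is accepted.
console.log(checkSearchInput("running shoes"));
// Given a search field, when the user types a special symbol, then the warning is shown.
console.log(checkSearchInput("shoes!"));
```

The comments on the last two calls show how the same criteria could be phrased in the Given/When/Then format.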

Finally, all user stories must fit the INVEST quality model:

- I – Independent

- N – Negotiable

- V – Valuable

- E – Estimable

- S – Small

- T – Testable

Independent. You can schedule and implement each user story separately. It’s very helpful if you employ continuous integration processes.

Negotiable. All parties agree to prioritize negotiations over specification. Details will be created constantly during development.

Valuable. A story must be valuable to the customer. You should ask yourself from the customer’s perspective “why” you need to implement a given feature.

Estimable. A quality user story can be estimated. It will help a team schedule and prioritize the implementation. The bigger the story is, the harder it is to estimate it.

Small. Good user stories tend to be small enough to plan for short production releases. Small stories allow for more specific estimates.

Testable. If we can test a story, it’s clear and good enough. Tested stories mean that requirements are done and ready for use.

Wireframes. Wireframes are low-fidelity graphic structures of a website or an app. They help map different product pages with sections and interactive elements.

Mockups. Wireframes can be turned into mockups – visual designs that convey the look and feel of the final product. Eventually, mockups can become the final design of the product.

Prototypes. The next stage is a product prototype that allows teams and stakeholders to understand what’s missing or how the product may be improved. Often, after interacting with prototypes, the existing list of requirements is adjusted.

Test Case Execution

Execution and execution results play a vital role in testing. Each and every activity should have proof.

The following should be recorded:

1. Number of test cases executed.

2. Number of defects found.

3. Screenshots of successful and failed executions, saved in a Word document.

4. Time taken to execute.

5. Time wasted due to the unavailability of the system.

Test Case Execution Process:

Take the test case document

↓

Check the availability of the application

↓

Implementation of test cases

Inputs:

- Test cases

- System availability

- Data availability

Process:

- Test it.

Output:

- Raise the defects

- Take a screenshot and save it.

| Test Scenario | Test Case |

|---|---|

| A test scenario is a high-level description of a test. | A test case is a detailed set of instructions for a test. |

| It outlines what needs to be tested. | It specifies how to execute the test. |

| Test scenarios are created based on requirements and user stories. | Test cases are derived from test scenarios or directly from the software’s requirements. |

| They provide a broader view of what is to be tested. | They provide specific steps and data to verify a particular functionality. |

| Test scenarios may encompass multiple test cases. | Test cases are individual steps within a test scenario. |

| Test scenarios focus on business needs and end-to-end scenarios. | Test cases focus on individual testable aspects or conditions. |

| They are less detailed and may not have specific test data. | They include specific test data and expected results. |

| Test scenarios serve as a basis for creating test cases. | Test cases are executed during the testing process. |

| Their purpose is to ensure that all major functionalities are covered. | Their purpose is to validate the correctness and reliability of the software |

Defect Handling

What is Defect?

In computer technology, a Defect is a flaw or imperfection in a software work product.

(or)

When the expected result does not match with the application actual result it is termed as Defect.

A defect that causes harm is one that might result in injuries, health issues, or death. For example:

- A usability defect that results in user dissatisfaction: this will not harm the user; it will only leave them dissatisfied.

- A defect that causes slow response time when running reports: this will not harm the user unless the reports are used in a critical place such as a hospital operating room.

- A defect that causes raw sewage to be dumped into the ocean: this will harm users because polluting the ocean directly harms human health.

- A regression defect that causes the desktop window to display in green: this will not harm the user, although it might annoy them if they dislike the color green.

It's your salary day, and the salary paid by your company is credited to your colleague, not to you. (Bug in the banking system)

🤯 You ordered pizza and Coke for a birthday party with 5 friends, waited for the delivery, and got only the Coke, not the pizza.

(The final order sent to the pizza shop listed only the Coke, without the pizza.)

😠 You subscribed to an OTT platform to watch an exciting cricket match, but you see a system error instead of the live match.

We work to prevent and report all these issues before they happen to you in real life. We are software testers, who work to ensure quality by preventing bugs 🐞 in applications.

A latent defect is one that has been in the system for a long time but is discovered only now, i.e. a defect that should have been detected earlier. One reason latent defects exist is that the exact set of conditions needed to trigger them has not yet been met.

- A latent bug is an existing bug that has stayed uncovered or unidentified in a system for a period of time. It may be present in one or more versions of the software and might be identified only after release.

- The problem does not cause damage right now, but waits to reveal itself at a later time.

- The defect is likely to be present in various versions of the software and may be detected after the release.

E.g. February usually has 28 days. If the system does not account for leap years, the result is a latent defect.

- These defects do not cause damage to the system immediately but wait for a particular event to cause damage and show their presence.
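
The leap-year example can be sketched in a few lines of Python. This is only an illustration: the function names `days_in_february_buggy` and `days_in_february_fixed` are made up for these notes and do not come from any real system.

```python
def days_in_february_buggy(year: int) -> int:
    # Latent defect: the year is ignored, so leap years are never handled.
    # The function looks correct in every non-leap year.
    return 28


def days_in_february_fixed(year: int) -> int:
    # Gregorian rule: divisible by 4, except century years
    # that are not divisible by 400.
    is_leap = year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)
    return 29 if is_leap else 28


for year in (2023, 2024):
    print(year, days_in_february_buggy(year), days_in_february_fixed(year))
```

Both functions agree for 2023, so tests run only with non-leap years would pass. The defect shows itself only when the triggering condition (a leap year such as 2024) finally occurs.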

Masked defect: a defect that hides another defect, so that the hidden defect is not detected at a given point in time. In other words, one existing defect prevents another defect from being reproduced.

A masked defect hides other defects in the system.

E.g. There is a link to add an employee in the system. After clicking this link you can also add a task for the employee. Let's assume both functions have bugs. However, the first bug (in Add Employee) goes unnoticed, and because of it the bug in Add Task is masked.

E.g. When a subsystem cannot be tested because of its own defects, other parts of it are not tested either, so defects in those parts remain unidentified.
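
A minimal sketch of the Add Employee / Add Task example, assuming a toy in-memory system. All names here (`add_employee`, `add_task`, `run_scenario`, the employee "Asha") are hypothetical and exist only for illustration.

```python
def add_employee(employees: dict, name: str) -> None:
    # Defect 1: the condition is inverted, so a new employee is never stored.
    if name in employees:  # should be: if name not in employees
        employees[name] = []


def add_task(employees: dict, name: str, task: str) -> None:
    # Defect 2: the task list is overwritten instead of appended to.
    employees[name] = [task]


def run_scenario() -> str:
    employees = {}
    add_employee(employees, "Asha")
    if "Asha" not in employees:
        # The scenario stops here, so add_task is never exercised.
        return "failed at Add Employee; Add Task was never exercised"
    add_task(employees, "Asha", "Write report")
    add_task(employees, "Asha", "Review report")
    if employees["Asha"] != ["Write report", "Review report"]:
        return "failed at Add Task"
    return "passed"


print(run_scenario())
```

As long as the first defect is present, the scenario never reaches `add_task`, so the second defect stays masked. It would surface only after the first one is fixed and the test is run again.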

Defects that are not found during system testing or UAT and then occur in production are called defect leakage.

It is calculated as:

Defect Leakage = (Number of defects slipped) / (Number of defects received - Number of defects withdrawn) * 100

Example: In production the customer raises 21 issues. During your tests 267 issues were reported, but 17 of them were invalid defects (e.g. because of wrong tests, a mistake by the tester, or an error in the test environment).

Then your Defect Leakage Ratio would be:

[21 / (267 - 17)] x 100 = 8.4%
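
The same calculation as a small Python helper. This is a sketch only: the function name `defect_leakage` and its parameter names are chosen for these notes and are not part of any tool.

```python
def defect_leakage(slipped: int, received: int, withdrawn: int) -> float:
    """Return the defect leakage ratio as a percentage."""
    valid = received - withdrawn  # defects that were actually valid
    if valid <= 0:
        raise ValueError("received must be greater than withdrawn")
    return slipped / valid * 100


# Numbers from the example above: 21 slipped, 267 received, 17 withdrawn.
print(f"{defect_leakage(21, 267, 17):.1f}%")
```

The guard against a zero or negative denominator is an added assumption; the formula itself does not say what to do in that case.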

What is Defect Density?

Defect density is the number of defects confirmed in a software product or module during a specific period of operation or development, divided by the size of that software or module. It helps one decide whether a piece of software is ready to be released.

Defect density is usually counted per thousand lines of code, also known as KLOC.

Formula to measure defect density:

- Defect Density = Defect count / Size of the release

The size of the release can be measured in lines of code (LoC).
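
A short Python sketch of the formula, expressed per KLOC. The function name `defect_density_per_kloc` and the sample numbers (30 defects in 15,000 lines) are assumptions made up for illustration, not data from a real project.

```python
def defect_density_per_kloc(defect_count: int, lines_of_code: int) -> float:
    """Return confirmed defects per thousand lines of code (KLOC)."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return defect_count / (lines_of_code / 1000)


# Hypothetical release: 30 confirmed defects in 15,000 lines of code.
print(defect_density_per_kloc(30, 15_000))
```

For this made-up release the result is 2 defects per KLOC. Whether that is acceptable depends on the threshold the team has agreed on.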

What is a Defect Age?

The time gap between the date of detection and the date of closure of a defect is termed Defect Age.
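
Defect age can be computed directly from the two dates. The sketch below assumes age is measured in whole days; the function name `defect_age_days` and the sample dates are hypothetical.

```python
from datetime import date


def defect_age_days(detected: date, closed: date) -> int:
    """Return the number of days between detection and closure."""
    if closed < detected:
        raise ValueError("closure date cannot be before detection date")
    return (closed - detected).days


# Hypothetical defect: detected on 1 March 2024, closed on 15 March 2024.
print(defect_age_days(date(2024, 3, 1), date(2024, 3, 15)))
```

For these sample dates the age is 14 days. Some teams measure age in hours or in working days instead, so the unit is a choice rather than part of the definition.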

What is the Showstopper Defect?

A defect that does not permit testing to continue any further is called a Showstopper defect.

Who can report a Defect?

Anyone who is involved in the software development life cycle, or who uses the software, can report a defect. In most cases the testing team reports defects.

A short list of people expected to report bugs:

- Testers/QA Engineers

- Developers

- Technical Support

- End Users

- Sales and Marketing Engineers

Root Cause: A source of a defect such that if it is removed, the occurrence of the defect type is decreased or removed.

Root Cause Analysis: An analysis technique aimed at identifying the root causes of defects. By directing corrective measures at root causes, it is hoped that the likelihood of defect occurrence will be minimized.

Root cause analysis can lead to process improvements that prevent a significant number of future defects from being introduced.

For example, suppose incorrect interest payments, due to a single line of incorrect code, result in customer complaints. The defective code was written for a user story which was ambiguous, due to the product owner’s misunderstanding of how to calculate interest. If a large percentage of defects exist in interest calculations, and these defects have their root cause in similar misunderstandings, the product owners could be trained in the topic of interest calculations to reduce such defects in the future.

Difference Between Bug And Defect

| Aspect | Bug | Defect |
|---|---|---|
| Terminology | Informal term | Formal term |
| Usage | Widely used by developers and users to refer to software issues | Primarily used in the context of software testing and quality assurance |
| Origin | Arises from various sources, such as coding errors, design flaws, or external factors | Arises due to discrepancies between the actual behavior and specified requirements or design |
| Context | Used in general discussions and conversations about software problems | Used specifically during the testing phase to identify deviations from expected behavior |
| Severity | Can range from minor glitches to critical errors affecting functionality | Usually associated with the failure to meet specified requirements and considered as deviations from expected behavior |
| Importance | Addressed during development and maintenance phases | Identified and documented during testing and resolved before release |
| Resolution | Can be fixed without being documented as a formal issue | Typically documented in a defect tracking system and must be resolved and validated |
| Formality | Often resolved informally or through informal communication | Requires a formal process to track, manage, and resolve defects |

Severity and Priority - What is the Difference?

Both Severity and Priority are attributes of a defect and should be provided in the bug report. This information is used to determine how quickly a bug should be fixed.

Severity vs. Priority

The severity of a defect describes how serious its impact is. It is usually defined in terms of financial loss, damage to the environment, damage to the company's reputation, and loss of life.

Priority of a defect is related to how quickly a bug should be fixed and deployed to live servers. When a defect is of high severity, most likely it will also have a high priority. Likewise, a low severity defect will normally have a low priority as well.

Although it is recommended to provide both Severity and Priority when submitting a defect report, many companies will use just one, normally priority.

In the bug report, Severity and Priority are normally filled in by the person writing the bug report, but should be reviewed by the whole team.

High Severity - High Priority bug

This is when a major path through the application is broken. For example, on an eCommerce website, every customer gets an error message on the booking form and cannot place orders, or the product page returns an Error 500 response.

High Severity - Low Priority bug

This happens when the bug causes major problems, but it only happens in very rare conditions or situations, for example, customers who use very old browsers cannot continue with their purchase of a product. Because the number of customers with very old browsers is very low, it is not a high priority to fix the issue.

High Priority - Low Severity bug

This could happen when, for example, the logo or name of the company is not displayed on the website. It is important to fix the issue as soon as possible, although it may not cause a lot of damage.

Low Priority - Low Severity bug

When a bug doesn't cause a disaster and affects only a very small number of customers, both Severity and Priority are set to low. For example, the privacy policy page takes a long time to load. Not many people view the privacy policy page, and slow loading doesn't affect customers much.

The above are just examples. It is the team who should decide the Severity and Priority for each bug.
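
To show how the two attributes can be used together, here is a small Python sketch that orders the four example bugs for fixing. It assumes a simple two-level scale and sorts by priority first, then by severity. The `Level` and `Bug` types are hypothetical, and a real team may use more levels or a different ordering rule.

```python
from dataclasses import dataclass
from enum import IntEnum


class Level(IntEnum):
    LOW = 1
    HIGH = 2


@dataclass
class Bug:
    title: str
    severity: Level
    priority: Level


bugs = [
    Bug("Privacy policy page loads slowly", Level.LOW, Level.LOW),
    Bug("Purchase fails on very old browsers", Level.HIGH, Level.LOW),
    Bug("Company logo missing on website", Level.LOW, Level.HIGH),
    Bug("Every customer gets an error on the booking form", Level.HIGH, Level.HIGH),
]

# Priority decides the fix order; severity breaks ties.
triage = sorted(bugs, key=lambda b: (b.priority, b.severity), reverse=True)

for bug in triage:
    print(f"{bug.priority.name:4} priority / {bug.severity.name:4} severity: {bug.title}")
```

With this rule the broken booking form comes first and the missing logo second, ahead of the old-browser problem, even though the latter is more severe.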

Defect Tracking Tools:

Bug Tracker—BSL proprietary Tools

Rational ClearQuest

Bugzilla

JIRA

Quality Center/ALM

Rally

JIRA Tutorial: A Complete Guide for Beginners

What is JIRA?

JIRA is a tool developed by the Australian company Atlassian. It is used for bug tracking, issue tracking, and project management. The name "JIRA" is derived from "Gojira", the Japanese name for Godzilla.

The basic use of this tool is to track issues and bugs related to your software and mobile apps. It is also used for project management. The JIRA dashboard offers many useful functions and features that make handling issues easy.

Under the Issues option, click Search for issues. This opens a window where you can locate your issues and perform multiple functions.

JIRA WORKFLOW

-----------------------------------------------------------------------------------------------------

ALM (Application Lifecycle Management) is a tool owned by Micro Focus.

It is used to track tasks, track defects, and manage projects. It is a commercial tool.

Tabs in ALM:

1) Dashboard

2) Management

3) Requirements

4) Testing

-> Test Plan: here we create test cases based on requirements

-> Test Lab: here we execute the test cases

5) Defects: used to create and track defects

BUG LIFE CYCLE:

The Defect Life Cycle (DLC) helps in handling defects efficiently. It lets users know the current status of a defect.

---------------------------------------------------------------------------------

===================================================================

Types of Software Testing

Software testing is generally classified into two broad categories: functional testing and non-functional testing. There is also another general type of testing called maintenance testing.

Manual vs. automated testing

At a high level, we need to make the distinction between manual and automated tests. Manual testing is done in person, by clicking through the application or interacting with the software and APIs with the appropriate tooling. This is very expensive as it requires someone to set up an environment and execute the tests themselves, and it can be prone to human error as the tester might make typos or omit steps in the test script.

Automated tests, on the other hand, are performed by a machine that executes a test script that has been written in advance. These tests can vary a lot in complexity, from checking a single method in a class to making sure that performing a sequence of complex actions in the UI leads to the same results. Automated testing is much more robust and reliable than manual testing, but the quality of your automated tests depends on how well your test scripts have been written.

Automated testing is a key component of continuous integration and continuous delivery, and it's a great way to scale your QA process as you add new features to your application. But there's still value in doing some manual testing in the form of what is called exploratory testing.

1. Functional Testing

Functional testing involves the testing of the functional aspects of a software application. When you’re performing functional tests, you have to test each and every functionality. You need to see whether you’re getting the desired results or not.